Workplace AI as a compliance matter

Artificial intelligence (AI) is and will continue to be a key topic and concern for legal and compliance professionals. Since AI has the capacity to automate processes that previously required careful human review and consideration, it is understandable that new AI-based tools tend to raise concerns from legal, ethical, and security perspectives. While evidence points to AI often making processes more efficient and minimizing risks of human errors, adoption of AI may entail concerns of unintended security risks, bias in decision-making, and loss of necessary human oversight.

An especially interesting area in this respect is the adoption of AI in the workplace. This includes novel tools for recruitment, workforce management, performance evaluation, employee monitoring, facility management, and various other adaptations in the human resources (HR) area. While AI undoubtedly has its appeal in terms of efficiency and automating routine operations, such as carrying out preliminary reviews of job applications or identifying potential misuse of company IT systems and property, such tools require a particularly careful assessment of relevant legal conditions in an EU regulatory context. Depending on the specific tool and its functions, typical legal requirements to consider include the new EU AI regulation as well as EU and national data protection and employment law.

In addition to being an employer’s legal obligation, carrying out sufficient and transparent compliance reviews of AI tools is often key in ensuring continued trust and dialogue at the workplace. For example, where any AI-based employee monitoring measures are considered, employees are often aware of and may invoke their privacy and similar rights if they are not convinced that the intended measures are appropriate and lawful.

Despite various genuine compliance concerns surrounding workplace AI, it is important to note that legal concerns over AI are also easily exaggerated. In many ways, AI is simply a new form of technology. Organizations have gone through numerous waves of technological development and innovative tools, and, in a sense, modern AI can be considered a new phase in this continuum. There is nothing inherently problematic in AI from a compliance perspective. Therefore, when developing and implementing AI compliance programs, organizations should always focus on what the envisaged AI tools actually do, what they access, and what they affect in the organization, and not on the notion of AI itself. Ultimately, this functional perspective is the most efficient way to identify the relevant legal implications of AI in a practical situation, including when examining AI solutions in the workplace.

Ensuring compliance through a use case approach

For compliance professionals responsible for the adoption of AI, the typical challenge is the overwhelming uncertainty of where and how to begin. AI is ‘nothing and everything’ in the sense that practically any process and tool can be supplemented with AI features. As mentioned above, in the workplace context covered in this article, potential AI uses include a variety of HR processes spanning from recruitment to workforce management and even employee monitoring. How do you build a sustainable AI compliance program that efficiently addresses and encompasses such diverse AI applications? In an attempt to govern AI comprehensively, many organizations find themselves drawing up overly broad, abstract, one-size-fits-all principles and policies on AI. While this may be a starting point, such frameworks often fall short of providing sufficiently concrete guidance, for example to HR managers, on what kinds of AI tools may be adopted and under what preconditions. At the same time, overly burdensome processes are a sure way to block AI adoption, which could have a critically negative impact on business competitiveness.

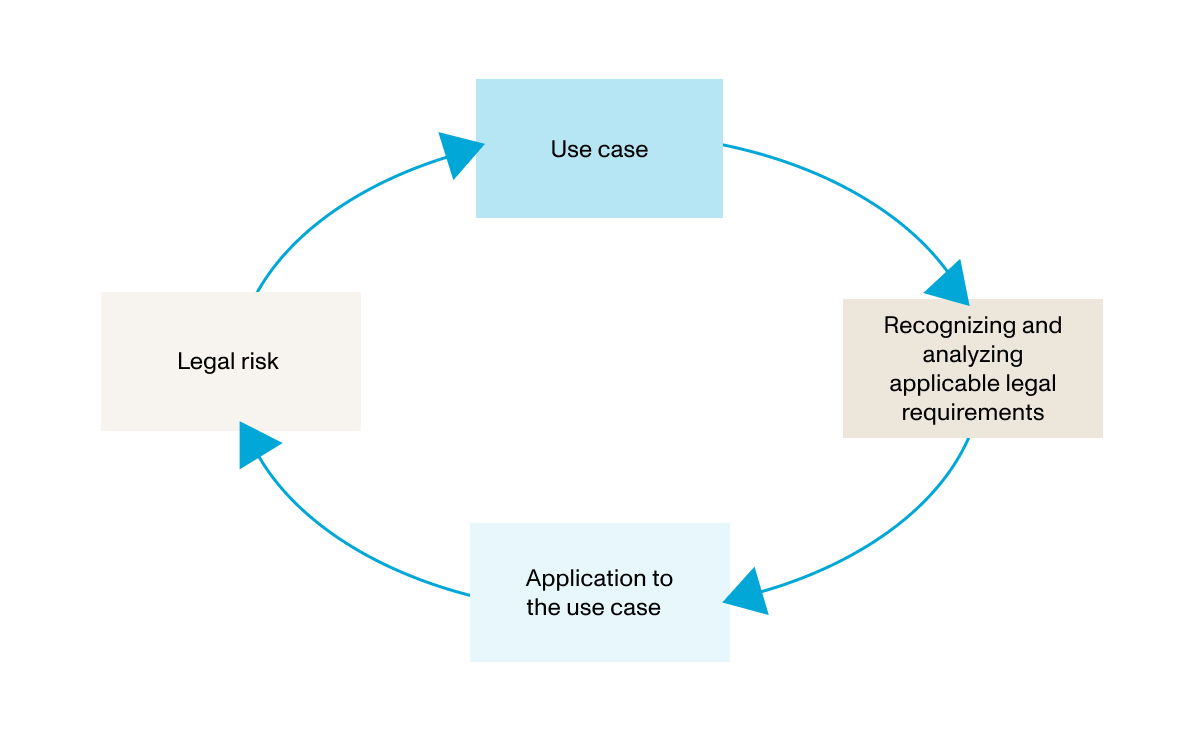

With these challenges in mind, the key characteristic of a sound and compliant adoption of workplace AI is, in our experience, applying a rigorous use case approach. In practice, this means that AI compliance and governance work is based on selecting the most relevant and topical AI use cases in an organization and subjecting them to a thorough legal analysis. The analysis determines the applicable statutory requirements and their impact on the use case, and leads to conclusions on the feasibility of, and potential adaptations to, the AI use case. In essence, applying a use case approach typically relies on the following stages:

i. Selecting the use case(s)

AI compliance efforts should focus on AI use that is identified as truly relevant and imminent for the organization. When selecting AI use cases for further legal analysis, it is essential to ensure that all relevant details of the use case, its scope and its purposes are clearly defined. This usually requires input from various stakeholders (for example, HR managers, IT personnel, employees and/or employee representatives). Ask yourself and the team: What benefits are we seeking through AI? What would the AI actually do? Would the AI tool be used in decision-making regarding employment relationships? Would the AI tool process employee personal data? Would employees’ electronic communications be impacted? Such details are essential for determining the relevant legal requirements (step (ii) below).

ii. Recognizing and analyzing applicable legal requirements

Following a careful definition of the AI use case (step (i) above), the assessment should proceed to recognizing the legal requirements that are triggered in each use case. In the context of HR AI tools, the ‘checklist’ of regulatory requirements to be considered typically includes at least the following:

- Classification under the AI Act:

European organizations adopting AI must observe the EU’s Artificial Intelligence Act (AI Act), which entered into force on 1 August 2024. Although the AI Act enters into application in a phased timetable (for the most part applying as of 2 August 2026), many organizations are treating the AI Act as already applicable to ensure that their AI tools are adopted in a sustainable manner and not subject to legal uncertainty going forward. The AI Act is based on the notion of four distinct risk categories of AI systems, i.e., unacceptable, high, limited, and minimal risk, and imposes requirements on an AI system based on its classification within these categories. In the context of workplace AI, the AI Act specifically recognizes certain employment-related AI systems as ‘high risk’ (section 4 of Annex III of the AI Act) and, accordingly, imposes stringent preconditions on their use. It is important to note that not all HR-related AI tools will fall under this highly regulated category. However, where an employer intends to rely on AI in recruitment processes, performance evaluations or deciding on promotions or disciplinary actions, the high-risk category is easily triggered.

- Data protection requirements:

AI tools in the workplace often entail processing of employees’ personal data, for example names, employment details, IT credentials, communications and online activity. Such processing of personal data must comply with the EU’s General Data Protection Regulation (GDPR). This includes ensuring that the processing has a legal basis (Article 6), is compatible with the initial purposes of processing (Articles 5(1)(b) and 6(4)), is transparent towards the employees (Articles 5(1)(a), 13, and 14), and is subject to a separate data protection impact assessment (DPIA), as necessary (Article 35). It is important to note that, on top of the GDPR, certain EU member states may have additional national laws and regulations specifically governing employee privacy (Article 88 of the GDPR, ‘Processing in the context of employment’). For example, in Finland, the Act on the Protection of Privacy in Working Life sets out various additional requirements on the processing of employees’ personal data, which may also impact the feasibility of AI tools in the workplace.

- Specifically regulated data assets:

Depending on the specific use case, modern AI tools may impact data types that are subject to heightened protection under applicable law. From an EU and, especially, a Finnish law perspective, such data types include location data and data relating to electronic communications. For example, an AI tool measuring office attendance may be considered to track the location of employees. In addition to general privacy concerns under the GDPR, local law (such as the Act on Electronic Communications Services in Finland) may impose additional requirements on such tracking. In turn, AI-based data security tools aiming to recognize and react to suspicious activity on company systems and devices may require that confidential employee communications (such as emails and Teams messaging) are accessed. In these cases, it is important to ensure that mandatory legal requirements are observed before such specifically protected data is impacted by an AI tool.

- Employment law implications:

The adoption of AI in HR processes may also have various employment law implications. In particular, where AI is used to support recruitment decisions, performance evaluations or even disciplinary actions, employment law restrictions may apply. A typical concern is whether AI could lead to bias in decision-making and, therefore, entail discriminatory practices. In addition, taking AI into use may require employee consultation and/or compliance with certain notification procedures.
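The checklist above can be illustrated with a small screening sketch that maps the scoping answers from step (i) to the review areas of step (ii). This is a minimal sketch under stated assumptions: the `UseCase` fields, the `review_checklist` function and the trigger logic are hypothetical simplifications for illustration, and the actual legal analysis will depend on the facts of each use case and on applicable national law.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """Answers to the step (i) scoping questions (field names are hypothetical)."""
    name: str
    supports_employment_decisions: bool  # recruitment, evaluation, promotion, discipline
    processes_personal_data: bool
    touches_location_or_communications: bool


def review_checklist(use_case: UseCase) -> list[str]:
    """Return the step (ii) review areas that the scoping answers appear to trigger.

    Simplified illustration only: a real assessment weighs many more facts.
    """
    # Every AI tool needs at least a risk-category check under the AI Act.
    areas = ["AI Act classification"]
    if use_case.supports_employment_decisions:
        areas.append("AI Act high-risk assessment (Annex III)")
        areas.append("Employment law (non-discrimination, consultation)")
    if use_case.processes_personal_data:
        areas.append("GDPR (legal basis, purpose, transparency, DPIA)")
    if use_case.touches_location_or_communications:
        areas.append("Specifically regulated data (location, communications)")
    return areas


if __name__ == "__main__":
    cv_screening = UseCase(
        name="Preliminary review of job applications",
        supports_employment_decisions=True,
        processes_personal_data=True,
        touches_location_or_communications=False,
    )
    for area in review_checklist(cv_screening):
        print(area)
```

The value of such a screen lies less in the code than in forcing the scoping questions to be answered explicitly before the legal review begins.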

iii. Application to the use case

The identification of applicable legal requirements is only one part of the legal analysis. Often, the more complex step is determining how the identified requirements should be implemented in practice. For example, how should employees be informed of the AI-related data processing in accordance with the GDPR? What measures could be taken to prevent the AI tool from being considered high risk under the AI Act? How should any necessary consents be obtained? These questions may considerably impact the practical rollout of the relevant AI solution.

iv. Identification of legal risk (and potential adjustment of the use case)

Identifying and applying legal requirements to a new technological solution is always a balancing act. Especially when it comes to new regulations and technologies, there is a certain level of uncertainty as to what is deemed compliant, and it is not always clear where the line between ‘right’ and ‘wrong’ should be drawn. As a result, an organization must determine what legal risks remain and whether those risks are within the organization’s risk appetite. Such assessments and their bases may evolve over time and may even result in reshaping the original use case in order to attain a compliant solution.

As is clear from the above, the described use-case-based method is a principled approach, and not a strict process. It can be applied in many shapes and forms of AI governance and compliance work, be it through AI governance committees or other frameworks. Regardless of the specific processes and mechanisms applied, it appears that companies focusing their compliance efforts on clearly defined and effective AI use cases can most efficiently leverage AI’s potential while minimizing legal risks.

Broader impact through benchmarking

An understandable criticism against a use case approach is that an in-depth legal analysis of each and every potential AI use case in an organization is simply not feasible. This is, of course, often true.

However, applying a use case approach does not mean applying a separate, comprehensive legal analysis to each individual AI tool or each version of a previously adopted AI solution. Compliance processes must always be somewhat selective and, for example, include thresholds and other methods to ensure that focus remains on the most important issues. The use case approach described in this article is specifically geared towards analyzing the most relevant and imminent use cases to provide a foundation for meaningful AI adoption in an organization.

More importantly, the selected and more rigorously evaluated use cases can often be leveraged as benchmarks for similar future use cases. This means that a newly envisaged AI tool that resembles an already analyzed use case will not require a thorough analysis of its own but, instead, can be more briefly checked against the previous use case analysis.
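The benchmarking idea can be sketched as a simple comparison: if the legal triggers of a new tool are already covered by a previously analyzed use case, a brief check against that analysis may suffice. This is only an illustrative sketch; the `needs_full_analysis` function, the trigger labels and the subset rule are assumptions made for the example, and in practice similarity between use cases is a matter of legal judgment rather than a mechanical test.

```python
from typing import Optional


def needs_full_analysis(
    new_triggers: frozenset[str],
    benchmarks: dict[str, frozenset[str]],
) -> tuple[bool, Optional[str]]:
    """Decide whether a new use case needs its own thorough analysis.

    Returns (False, benchmark_name) if every trigger of the new use case is
    already covered by one previously analyzed benchmark, else (True, None).
    The trigger labels are hypothetical.
    """
    for name, covered in benchmarks.items():
        # Subset test: the benchmark analysis addressed all of these triggers.
        if new_triggers <= covered:
            return False, name
    return True, None


if __name__ == "__main__":
    analyzed = {
        "cv_screening": frozenset({"personal_data", "employment_decisions"}),
    }
    # Covered by the earlier analysis: a brief check against it may suffice.
    print(needs_full_analysis(frozenset({"personal_data"}), analyzed))
    # Introduces a trigger not yet analyzed: a fuller review is indicated.
    print(needs_full_analysis(frozenset({"personal_data", "location_data"}), analyzed))
```

A new trigger, such as location data in the second call, is a signal that the earlier analysis does not fully transfer and that the new elements need to be assessed separately.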

Tuning out the static

Ensuring compliant AI systems in the workplace and designing relevant legal evaluation processes is no straightforward task. The most appropriate method will always depend on the specific organization, its operational context, and its approach to AI in general.

However, a regrettable trend in ongoing discussions on AI and relevant compliance approaches is the tendency of certain commentators to challenge and dismiss established compliance approaches. Such critics often refer to companies’ alleged misconceptions and blind spots in AI governance while declaring how things ‘should’ be done. This rhetoric can often hamper constructive dialogue and shift the focus to debating (and being right) instead of fruitful knowledge exchange.

Arguably, it is sometimes important to tune out the static. Critical views can and should be considered, but it is rarely beneficial to join in the panic. Organizations typically have established compliance programs and approaches that are tailored to the organization, its business and its functions. Often, the best expert on ‘what works’ is the organization itself.

Our team is happy to discuss any questions you may have regarding the compliant adoption and use of AI tools in the workplace.
