Shake-up to EU privacy, data, and AI rules

On 19 November 2025, the European Commission published proposals for the so-called Digital Omnibus Regulation and the Digital Omnibus on AI Regulation (collectively referred to as the Digital Omnibus). The Digital Omnibus is a widely debated proposal, which represents an ambitious effort to streamline digital regulation and reduce regulatory costs in the EU. It is a part of the Commission’s broader objective to simplify EU legislation and improve the competitiveness of the EU market.

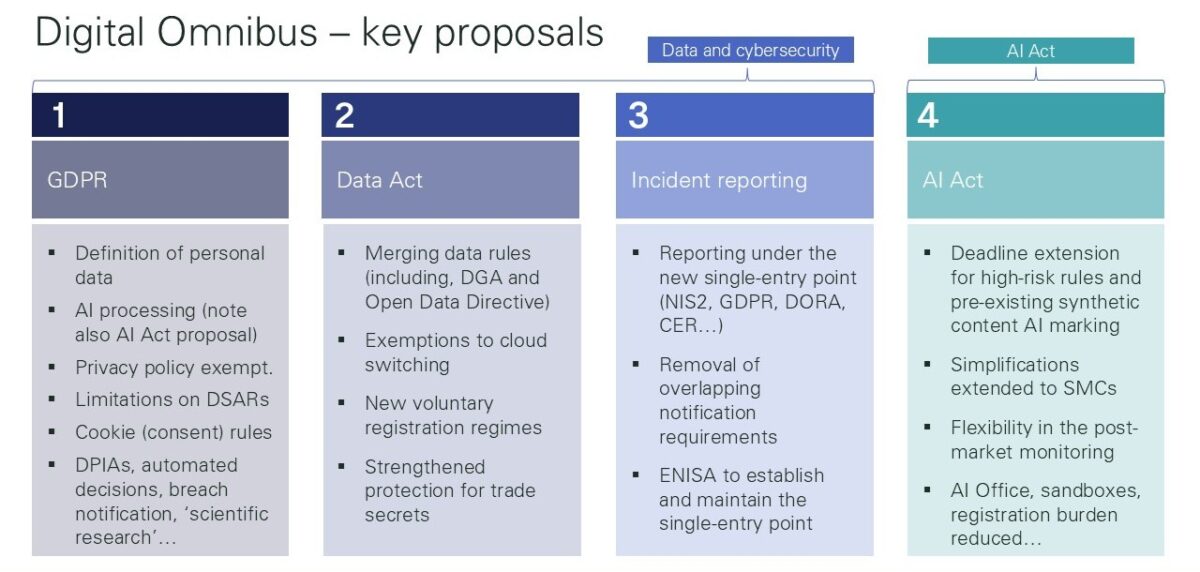

Overall, the Digital Omnibus is exceptional in both its scope and approach. It proposes material amendments to key pieces of existing privacy, data, and artificial intelligence (AI) regulation, namely the General Data Protection Regulation (GDPR), Data Act, Artificial Intelligence Act (AI Act), as well as certain related acts. Notably, the proposal also seeks to amend certain regulatory frameworks that, although currently in force, have not yet become applicable, thereby representing an unusual legislative intervention at an early stage of implementation.

In this article, we focus on what the changes set out in the Digital Omnibus would mean for data protection compliance in the EU.

The Digital Omnibus at a glance

In terms of the GDPR, the Digital Omnibus sets out various amendments aimed at lightening the data protection compliance burden. Proposed amendments include adjustments to the definition of personal data itself, exemptions to transparency and information obligations, and increasing the threshold and deadline for data breach notifications. New flexibilities would also be introduced to better allow for AI-related processing of personal data. The proposal also aims to reduce so-called cookie fatigue by providing alternative legal bases to consent for certain tracking technologies, requiring single-click buttons for cookie refusal, and supporting centralised online methods for providing consent to cookies.

The recently applicable Data Act would also be subject to various changes. These include merging certain previously scattered data governance rules (the Data Governance Act, the Open Data Directive and the Free Flow of Non-Personal Data Regulation) into the Data Act, providing exemptions to cloud switching rules, and introducing voluntary registration regimes. The proposal would also increase the protection for trade secrets in certain statutory data sharing contexts.

For the AI Act, the Digital Omnibus would extend the implementation timeline for high-risk AI rules as well as the so-called watermarking requirements for AI systems generating synthetic content. The proposal would also make certain relief from compliance requirements more widely available (extending it to small mid-cap enterprises in addition to small and medium-sized enterprises), and strengthen and centralise authorities’ roles in supporting compliance.

An overview of the key elements of the Digital Omnibus is presented in the table below:

What does this mean for data protection compliance in the EU?

While any given element of the Digital Omnibus would warrant further examination, a particularly interesting perspective, and the focus of this article, is what the proposed changes would mean for data protection compliance in the EU. Many changes proposed under the Digital Omnibus may, ultimately, have rather sector-specific impacts. By contrast, as is typically the case with the GDPR, the proposed changes regarding data protection would affect essentially every European company processing personal data – in practice, meaning every European company.

Of the potential GDPR changes, three proposed reforms appear particularly relevant for future data protection compliance: the adjusted definition of personal data, further flexibility for processing personal data in the context of AI, and the introduction of new limitations on data subject access requests. For each reform, we examine below the current regulatory position, the proposed amendment, and the practical impact of the changes on businesses and their compliance efforts.

Adjustment to the definition of personal data

Current situation: Under Article 4(1) of the GDPR, personal data is defined as any information relating to an identified or identifiable natural person. A natural person can be identified if they can be singled out, for example by reference to a name, ID number, location data, online identifier, or other factors specific to them. As supported by EU case law, the concept of personal data is considerably broad. This has meant that many forms of even technical, pseudonymised, or encrypted data may be considered personal data, thereby requiring full compliance with the GDPR.

Proposed amendment: The definition of personal data would be narrowed. Information would not be considered personal data for a given entity when that entity cannot reasonably identify the natural person to whom the information relates. Moreover, the mere ability of another entity to identify the natural person would not be sufficient to make the information personal data. Identifiability of data would, therefore, be assessed from a more subjective perspective instead of considering (objective) possibilities of identification in a broader ecosystem.

Practical impact: The proposed amendment to the concept of personal data would have a considerable impact on organisations, potentially allowing them to avoid the application of the GDPR in many situations. For example, in certain ICT services, it might no longer be necessary to execute data processing agreements (GDPR Article 28) if a service provider does not itself have the means to identify individual persons from the data it processes. Certain cross-border data routing arrangements could also benefit from the more limited personal data concept if the burdensome requirements on international transfers of personal data (GDPR Chapter V) could consequently be avoided.

Personal data in AI

Current situation: Processing personal data requires a legal basis. One of the most commonly used legal bases is the data controller’s or a third party’s legitimate interest (GDPR Article 6(1)(f)). The legitimate interest basis may be relied upon subject to a case-by-case balancing test between the controller’s and data subject’s rights and interests. Stricter rules apply to special categories of personal data, such as health data, which are subject to a general prohibition on processing unless an exception applies (GDPR Article 9). The development and operation of AI, particularly large language models and generative AI, often involves vast datasets that may at least inadvertently contain special category data, creating compliance challenges under the current framework.

Proposed amendment: The proposal introduces two key amendments to facilitate AI-related data processing. First, it directly recognises the development and operation of AI as a legitimate interest for processing personal data. Second, the proposal creates a new exception to the prohibition on processing special category data. This exception recognises that special category data may residually exist in training, testing, or validation datasets, or be retained in AI systems or models, even where such data is not necessary for the processing purpose. The exception permits this kind of inadvertent processing where the controller has implemented effective technical and organisational measures to avoid processing such data and other related safeguards throughout the AI system’s lifecycle. In addition to this change under the GDPR, the AI Act would provide a new exception to process special category data in connection with AI for bias detection and mitigation.

Practical impact: The amendment would significantly reduce the compliance burden and uncertainty for AI developers and adopters by clarifying what is deemed a legitimate interest, and creating new solutions for processing special category data in AI development and operation. Together with certain other proposed changes (including the broadened concept of scientific research and adjusted provisions on automated decisions), the proposals would create considerable legal leeway in the context of AI.

Easier refusal of data subject access requests

Current situation: Data subjects have the right to obtain confirmation on whether their personal data is processed and, where applicable, access the data and receive certain additional information on its processing (GDPR Article 15). Currently, controllers are permitted to refuse manifestly unfounded or excessive requests or charge a reasonable fee in such situations. The burden of proof rests on the controller to demonstrate that a request is manifestly unfounded or excessive. In practice, this threshold has proven difficult to meet, leaving controllers exposed to abusive and burdensome requests, especially where there is an existing disagreement in the underlying relationship (for example, burdensome requests from terminated employees or disgruntled customers).

Proposed amendment: The proposal would supplement the controller’s right to refuse or charge for data subject access requests where the data subject is considered to abuse their GDPR rights ‘for purposes other than the protection of their data’. Such cases could include the data subject provoking refusal in order to claim compensation, merely causing harm to the controller, or extracting benefits in exchange for withdrawing the request.

Practical impact: This amendment would provide controllers with greater ability and certainty to refuse or charge for data subject access requests that appear to be of an abusive nature, thereby reducing administrative burdens, costs, and even legal risks associated with responding to such requests. A particularly challenging area in access requests is typically where a disgruntled customer or ex-employee attempts to leverage their GDPR access rights to obtain contentious information or documents from a company. Such concerns would be significantly addressed if controllers could more easily refuse access requests through the proposed amendment. However, even if the proposed change becomes applicable, controllers should continue to be cautious when deciding to refuse access requests and maintain clear documentation of their related assessments, since supervisory authorities will ultimately determine whether a refusal was justified.

How to prepare?

At this stage, the process ahead for the Digital Omnibus is not fully clear. Adoption of the proposed amendments has been expected to occur in mid to late 2026, but the many contentious points in the proposals could delay this. On the other hand, should a so-called urgent procedure be applied, quicker adoption would be possible. Earlier adoption would be particularly important for the Digital Omnibus on AI Regulation, since its key element would be to postpone the application of certain AI Act provisions, which would otherwise apply as of 2 August 2026.

While the objectives of the Digital Omnibus have garnered broad support, particularly from the business community seeking regulatory simplification, the proposal has also faced significant criticism regarding legislative quality and compromises to individuals’ rights. The significance of the proposed amendments suggests that the legislative process will be complex and politically challenging. Consequently, the final form of any adopted amendments may differ substantially from the current proposal.

Due to the early stage of the Digital Omnibus, it could be advisable to defer any concrete implementation until there is greater clarity on the likelihood of adoption and the final content of the amendments and, at this point, focus on monitoring the adoption process.

However, there is one area where ‘preparing ahead’ appears particularly beneficial: contracts. Organisations should already keep a close eye on material contractual arrangements which could be affected by the Digital Omnibus and ensure that those arrangements include adequate change management mechanisms. In essence, this may be achieved by approaching key service providers and other contractual partners with simple questions: Would changes to the definition of personal data impact our existing (data processing) agreements? Should changes in the cloud switching rules be reflected in existing cloud service terms? Could the scope of an envisaged AI development project be broadened in light of the proposed new flexibilities? How and under what conditions and pricing would existing contracts be amended to address the proposed changes? Getting clarity on such questions is typically of vital importance where agreements are negotiated for long contract periods and/or affect material ICT resources.

Our team is happy to discuss any questions you may have regarding the EU privacy, data and AI rules.
